Research

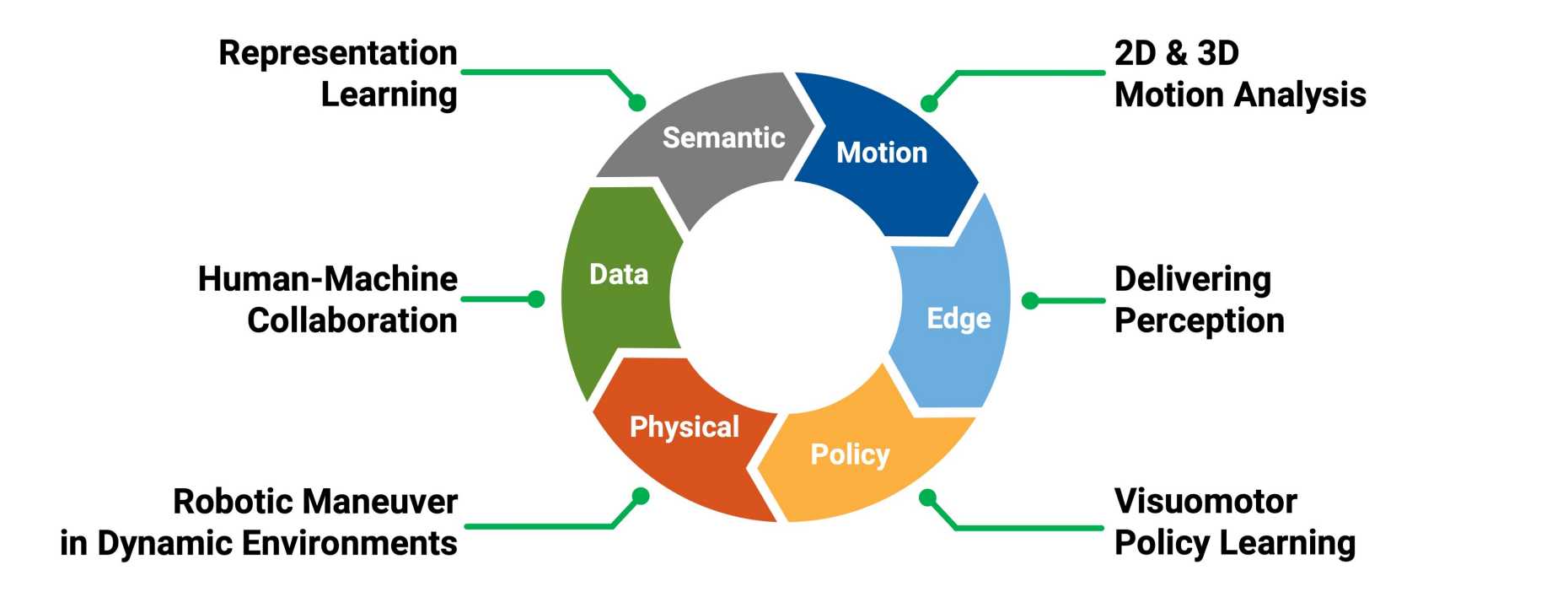

Our group focuses on learning representations for object recognition and motion understanding in images and videos, and on building perceptual robotic and software systems based on these visual representations. Our team has developed influential algorithms, models, software, and datasets that push the scientific frontiers of fundamental computer vision problems and visual learning systems.

Representation Learning

The success of representation learning with deep convolutional networks has fueled the recent surge of interest in artificial intelligence, and our past research has become an integral part of this trend. Although we have made significant progress, several disruptive advances in representation learning are still needed to reach human-level recognition capabilities. We continuously explore new operations, new structures, and new training methods to design a universal architecture for representation learning that addresses the challenges of multi-task learning, data efficiency, and model generalizability. We also study fundamental problems in computer vision such as object detection, segmentation, and image enhancement.

Algorithms for 2D and 3D motion analysis

Complex environments are usually filled with dynamic objects, but the foundations of object tracking are far weaker than those of tasks such as object detection and semantic segmentation. This gap has hindered the development of perceptual control systems for autonomous driving and robotic manipulation. We have therefore been working on monocular 2D and 3D object tracking, and our current algorithms outperform previous state-of-the-art methods by 5 to 10 percent on multiple datasets. However, much more research is needed to track every object in every environment without large amounts of human annotation. We will also explore applications of dynamic 3D scene understanding in VR/AR.

Real-world delivery of perception models

ConvNets have brought great success in building computer vision models, but using those models in real applications remains difficult. We formulate these difficulties as problems of efficient networks, few-shot learning, self-supervised learning, multi-task learning, and domain adaptation, and we are currently working on them from the "delivery" perspective.

Human-machine collaboration for large-scale data exploration

The future of AI lies in making our lives better. We study how to use AI to enhance human capabilities in performing complicated and time-consuming tasks. Our starting point is collaborative large-scale data processing: we aim to reduce the human effort required to label large-scale datasets with help from machine learning models. We will study active learning and online learning for structured tasks, as well as the underlying software systems that support these new types of model serving. We are especially looking for people with strong programming and system-building skills to work on systems research for real-time, low-latency human-machine collaboration.

Visuomotor reinforcement learning for motion and manipulation

An independent intelligent system is expected to act on its own by sensing the environment and making action decisions. Rule-based systems often fail to account for the complexity of the real world and the deficiencies of their own internal components. We study learning algorithms and architectures for control systems based on visual inputs. Our past research found that, although visual inputs introduce additional learning complications, they can provide new forms of supervision and different modeling insights for reinforcement learning. We will build on these findings to design effective end-to-end learning systems for driving and manipulation.

Robot interaction in dynamic environments

Nothing feels more real than physical robots running our algorithms. We need to build actual robots to collect training data and evaluate our algorithms, and we are fortunate to have strong support for building and running robots of various sizes and capabilities. We have a dedicated lab space (with air conditioning!) at ETZ for experimenting with robotic arms and mobile platforms. We also have parking space to maintain our full-size cars with drive-by-wire capability. Ongoing research directions include replayable data collection, 3D localization and mapping, and hand-leg coordination.